The New Energy + AI Industrial Complex

Building the Backbone of the Next Economy

I’ve been obsessed with the energy equation—not in the abstract sense of electricity, but in the hard realities of what needs to be true for AI to reach its potential. And one fact keeps coming back to me: power scarcity in the U.S. is real, and it’s on a collision course with AI’s exponential growth curve.

We talk endlessly about compute capacity, model architecture, and data availability. But all of that presumes something more fundamental: that the lights stay on, the voltage stays steady, and the grid can deliver. Without that, AI’s theoretical capacity is just that—theoretical.

As I’ve read more about this, it’s become clear that we’re in the middle of a new industrial convergence: the digital and the electrical systems are fusing into a single strategic map. This map has five main pillars—grid infrastructure, clean baseload generation, storage and resilience, compute efficiency, and architectural design. Together, they form what I call The New Energy–AI Industrial Complex. The companies that understand this ecosystem—and secure their place within it—will define the next decade of AI leadership.

The Arteries of the AI Age: Grid Infrastructure

The grid is the circulatory system of the AI economy. No matter how powerful the heart, nothing flows without clear, unobstructed arteries. In this case, those arteries are high-voltage transmission lines, substations, and underground connections—and they are under unprecedented strain.

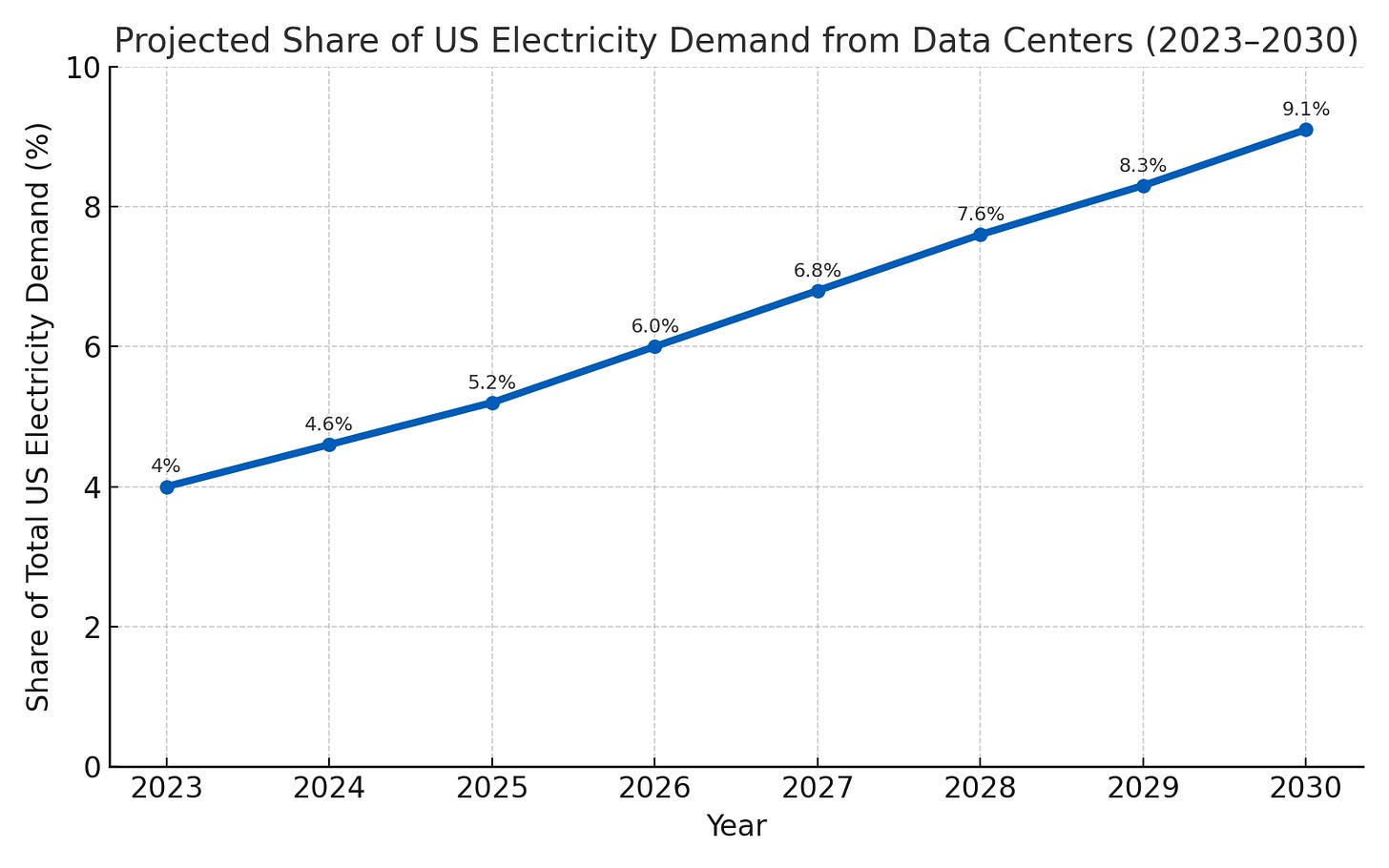

The scale of this challenge is staggering. The U.S. Department of Energy and Lawrence Berkeley National Laboratory now project that electricity usage from data centers will at least double, potentially triple, by 2028. This is a dramatic shift from the flat demand the grid has seen since 2007, with AI alone expected to account for 30–40% of all new electricity demand through 2030.

In July 2024, Northern Virginia, home to the largest cluster of data centers on Earth, narrowly avoided disaster. A transmission fault briefly took 60 data centers offline, pushing them to diesel backup. The sudden drop in demand left the grid with surplus generation that operators had to rein in quickly, and it risked a cascading outage across the entire grid. Dominion Energy now faces over 40 GW in pending data center load requests, roughly equal to California’s peak summer demand.

New high-voltage transmission lines in the U.S. take 7–10 years to build, bogged down in permitting, lawsuits, and environmental review. In contrast, China deploys ultra-high-voltage lines in a fraction of that time, connecting its renewable-rich western provinces to industrial hubs in the east—a speed advantage with clear implications for its AI ambitions.

Quanta Services (PWR) is one of the few companies with the capacity and expertise to execute large-scale builds like the 550-mile SunZia Transmission and Wind Project, which took 17 years to approve. These timelines illustrate the gap between AI’s urgency and infrastructure’s reality.

The Steady Hand: Clean Baseload Generation

AI’s demand curve is unlike almost any other—flat, relentless, and intolerant of interruptions. That’s why baseload generation—power available every hour of every day—is the unglamorous but essential foundation.

Big tech is no longer waiting for the grid to solve this. Amazon’s move to colocate with a Pennsylvania nuclear plant locks in guaranteed megawatts, independent of grid congestion. In Texas, some AI campuses are installing on-site natural gas turbines—trading carbon intensity for control. The choice of baseload source is a climate inflection point. Meeting demand with gas or coal will raise emissions. Meeting it with nuclear, hydro, or geothermal keeps AI growth aligned with decarbonization goals.

NuScale, TerraPower, and Westinghouse are leading the charge in small modular reactors (SMRs)—50–300 MW nuclear units that can be sited next to data centers. Standardized designs make them easier to permit and finance. TerraPower’s advanced load-following capabilities allow for seamless integration with intermittent renewables. In May 2025, the Nuclear Regulatory Commission approved NuScale’s SMR design—an important precedent for the sector.

Buying Time: Storage & Resilience

Even the best generation and transmission plans can fail. Storage buys you time—time to ride out a transmission fault, a renewable lull, or a price spike in the energy market. And it’s not just about minutes or hours anymore. Long-duration storage is becoming critical. Form Energy’s iron-air batteries, with up to 100 hours of discharge, could keep a facility running through multi-day outages. Tesla’s Megapacks already help data centers shave peak demand charges while serving as emergency backup.
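As a back-of-the-envelope sketch, ride-through time is simply stored energy divided by the load it has to carry. The 20 MW facility load below is a hypothetical value chosen for illustration, and the calculation ignores conversion losses and degradation; only the 100-hour duration comes from the text above.

```python
def energy_needed_mwh(load_mw: float, hours: float) -> float:
    """Usable energy required to carry a constant load for a given duration."""
    return load_mw * hours


def ride_through_hours(usable_energy_mwh: float, load_mw: float) -> float:
    """Hours a battery can carry a constant load, ignoring losses."""
    if load_mw <= 0:
        raise ValueError("load_mw must be positive")
    return usable_energy_mwh / load_mw


# Hypothetical facility load (assumed for illustration only).
facility_load_mw = 20.0

# Energy to cover the full load for 4 hours versus 100 hours.
four_hour_pack_mwh = energy_needed_mwh(facility_load_mw, 4.0)
hundred_hour_pack_mwh = energy_needed_mwh(facility_load_mw, 100.0)

print(f"4-hour pack:   {four_hour_pack_mwh:.0f} MWh")
print(f"100-hour pack: {hundred_hour_pack_mwh:.0f} MWh")
print(f"Ride-through:  {ride_through_hours(hundred_hour_pack_mwh, facility_load_mw):.0f} h")
```

The gap between the two figures is the point: multi-day resilience needs roughly 25 times the stored energy of a typical four-hour system at the same load, which is why cost per stored megawatt-hour matters so much for long-duration technologies.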

Policy is enabling this market. FERC Order 841 opened wholesale markets to storage, unlocking an estimated 50 GW of potential capacity in the U.S. Globally, countries like Australia, South Korea, and Germany are moving faster, deploying large-scale batteries and experimenting with hydrogen storage.

The first company to crack cost-effective, long-duration storage will hold a strategic choke point in the AI power stack.

Making Every Watt Count: Compute Efficiency

In a power-constrained world, efficiency is a growth multiplier. Every performance-per-watt gain extends the life of existing infrastructure, acting as a “virtual power plant.”

NVIDIA’s latest GPUs deliver significantly more compute per watt than their predecessors, effectively adding capacity without new grid connections. AMD’s MI300 accelerators and Cerebras’s wafer-scale processors pursue similar gains. Cerebras’s architecture, which keeps communication on a single wafer rather than routing it between separate chips, can cut power use for certain AI workloads.
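The "virtual power plant" idea can be made concrete with a little arithmetic. The sketch below assumes a hypothetical 100 MW fleet and a hypothetical 2x performance-per-watt gain; neither number comes from a vendor, and real gains vary by workload.

```python
def power_freed_mw(current_power_mw: float, perf_per_watt_gain: float) -> float:
    """Power no longer needed to deliver the SAME compute after an efficiency gain.

    perf_per_watt_gain is the ratio new/old (2.0 means twice the work per watt).
    """
    if perf_per_watt_gain <= 0:
        raise ValueError("perf_per_watt_gain must be positive")
    return current_power_mw * (1.0 - 1.0 / perf_per_watt_gain)


def compute_at_fixed_power(current_compute: float, perf_per_watt_gain: float) -> float:
    """Compute deliverable on the SAME power budget after the gain."""
    if perf_per_watt_gain <= 0:
        raise ValueError("perf_per_watt_gain must be positive")
    return current_compute * perf_per_watt_gain


# Hypothetical fleet and gain (assumed for illustration only).
fleet_power_mw = 100.0
gain = 2.0

freed = power_freed_mw(fleet_power_mw, gain)
scaled = compute_at_fixed_power(1.0, gain)

print(f"Same compute, power freed: {freed:.0f} MW")
print(f"Same power, compute multiplier: {scaled:.1f}x")
```

Read either way, the efficiency gain behaves like new supply: an operator can serve today's workload with less power, or serve a larger workload on the grid connection it already has.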

Efficiency is also a climate lever. Every watt saved reduces strain on grids and the need for carbon-intensive generation. The EU’s Energy Efficiency Directive will force transparency on data center energy use per workload; while U.S. rules lag, investor scrutiny is rising.

Chinese players like Biren and Huawei are also targeting efficiency as a differentiator. Closing the performance-per-watt gap could let them compete globally on both cost and carbon intensity.

Design as Strategy: Architectural Innovation

Data center design is where the upstream investments in generation, storage, and efficiency either compound or dissipate. Digital Realty has achieved a power usage effectiveness (PUE, the ratio of total facility energy to IT equipment energy) below 1.2 in its Northern European modular builds by integrating rooftop solar, on-site batteries, and liquid cooling. Equinix is experimenting with seawater cooling in Singapore and hydrogen fuel cells in the UK, while reaching 96% renewable energy coverage globally.

Cooling remains a critical front. It can account for 30–40% of a data center’s total energy draw. Moving to liquid immersion cooling and locating in cooler climates can dramatically cut that load. In water-scarce regions like the U.S. Southwest, operators are being forced toward air-based or closed-loop systems.
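To see why cooling and facility overhead matter so much under a fixed grid connection, it helps to work through the PUE relationship (total facility power divided by IT power). This is a minimal sketch: the 60 MW grid connection and the 1.5 comparison PUE are hypothetical values, while 1.2 echoes the figure cited earlier.

```python
def it_capacity_mw(grid_connection_mw: float, pue: float) -> float:
    """IT load a site can support on a fixed grid connection at a given PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return grid_connection_mw / pue


def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) implied by a PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * (pue - 1.0)


# Hypothetical grid connection (assumed for illustration only).
grid_mw = 60.0

for pue in (1.5, 1.2):
    it_mw = it_capacity_mw(grid_mw, pue)
    print(f"PUE {pue}: {it_mw:.1f} MW for IT, {overhead_mw(it_mw, pue):.1f} MW overhead")
```

Under these assumptions, moving from a PUE of 1.5 to 1.2 lifts usable IT capacity from about 40 MW to about 50 MW without any change to the grid connection, which is the sense in which design choices compound the upstream investments.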

The pillars of the New Energy–AI Industrial Complex are deeply interdependent. An SMR breakthrough could unlock new geographies for AI campuses. A leap in chip efficiency could delay the need for new transmission. An architectural innovation could change the economics of site selection.

AI strategy is now inseparable from energy strategy. Whether you own, lease, or partner for capacity, your moat may increasingly be measured in megawatts, not patents.